Hours of digging

Done in minutes

TierZero Incident Agent joins incidents as your right hand: gathering context, surfacing what's relevant, and helping you figure out how to stop the bleeding and why it happened.

How it works

TierZero joins the incident

When an incident is raised, TierZero Incident Agent joins and starts gathering context. Tag @TierZero to hand off new investigation theories or to ask for updates.

Root cause analysis

TierZero synthesizes signals across your stack (code changes, logs, traces, metrics, deploys, past incidents, runbooks) and surfaces high-signal clues to the channel.

Post-mortem, action items, Jira tickets

Auto-generated post-mortem, action items, and Jira tickets. Reduces the painful "recovery to resolution" cycle from days to hours.

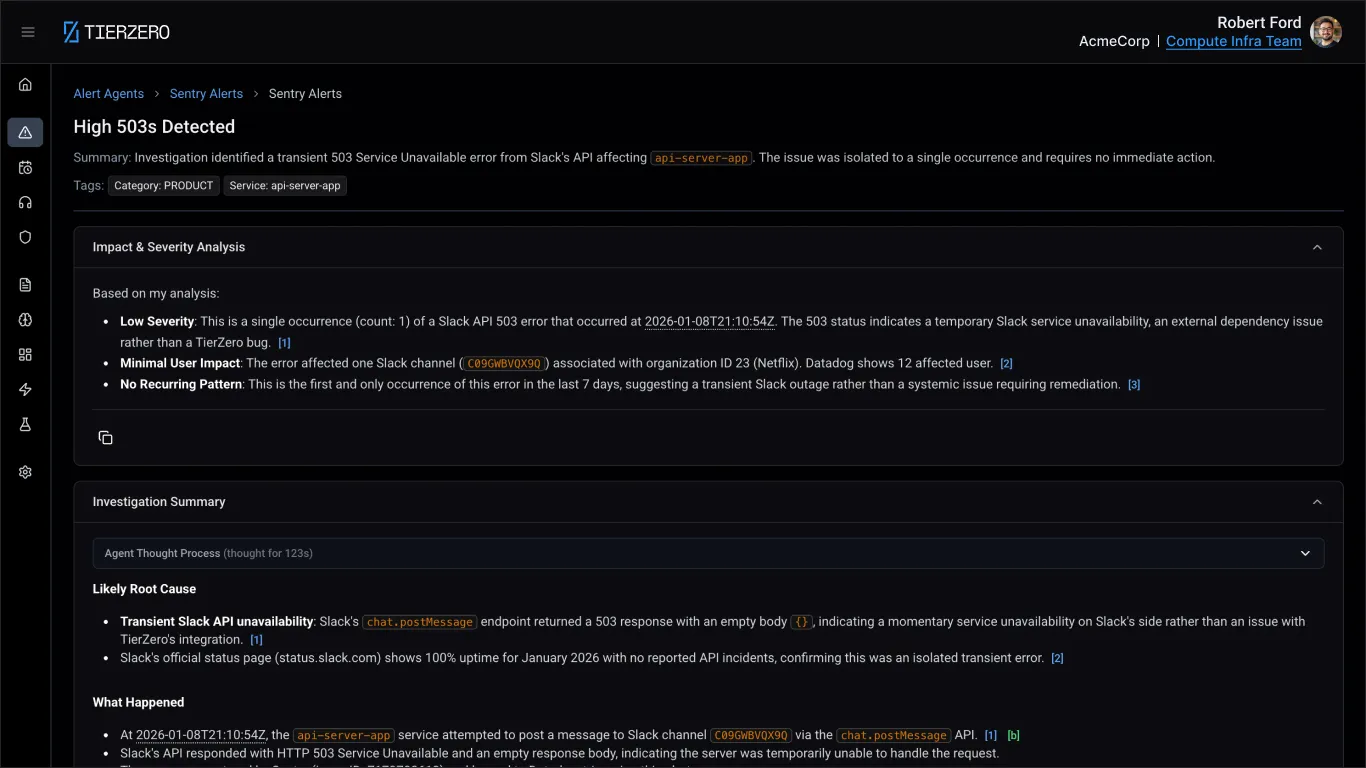

Executive Summary

Some customers are unable to complete checkout. A fix is being rolled out and error rates are already dropping.

503s on checkout-api spiked at 14:32 UTC following deployment v2.14.3. The pg-bouncer connection pool is saturated and a rollback has been initiated. Downstream payment-svc is returning timeouts due to the same connection starvation. Error rate has dropped from 12.4% to 3.1% since rollback began.

Impact and Severity

About 2,300 customers have seen checkout errors in the last 47 minutes. No data loss, and failed orders can be retried once resolved. Customer support should expect elevated ticket volume for payment-related issues.

External Messaging

Suggested status page update:

“We are investigating issues with checkout and payment processing. Some orders may fail to complete. Our team has identified the cause and a fix is being deployed. No payment data has been affected. We will provide another update within 15 minutes.”

Keep stakeholders in the loop.

When your CTO, customer success, or another engineer joins an incident channel mid-flight, they don't need to ask "what's going on?", and no one has to stop debugging to explain.

Live dashboard

Full context, timeline, investigation findings, and charts from your observability tools.

Ask TierZero directly

Tag it anytime for the latest status or to ask specific questions.

Ephemeral Slack message

Private summary sent the moment someone joins the incident.

Post-mortems drafted before the retro starts.

After an incident, engineers get pulled back into feature work. Post-mortems get deprioritized, delayed, and sometimes never finished. TierZero generates a first draft from the signals it collected during the incident.

True incident timeline

Grounded in telemetry data collected during the incident.

Customer and service impact assessment

Scope and severity documented automatically.

Report drafted from your template

Or in the standard 5-whys format.

Action items with suggested ownership

Clear next steps assigned to the right people.

Timeline

v2.14.3 rolled out to checkout-api

pg-bouncer pool exhaustion from new query pattern

Rollback to v2.14.2 initiated

Root Cause

Deployment v2.14.3 introduced an N+1 query in the cart validation path. Each checkout request opened 12-15 new DB connections instead of 1, saturating the pg-bouncer pool within minutes. Downstream payment-svc timed out waiting for connections from the same pool. The offending commit (a3f29bc) refactored item-level discount lookups but removed the batch prefetch. This was not caught in staging because the test dataset only had single-item carts.

Impact

~2,340 customers saw checkout failures over 47 minutes. 1,847 requests returned 503s. No data loss; failed orders are retryable. Estimated revenue impact: $18.2K in delayed transactions.

Action Items

pg-bouncer config

checkout-api

The fastest path to happier customers.

Time to Clue

MTTR Reduction

Time savings per year